Coronavirus: Facebook alters virus action after damning misinformation report

BBC

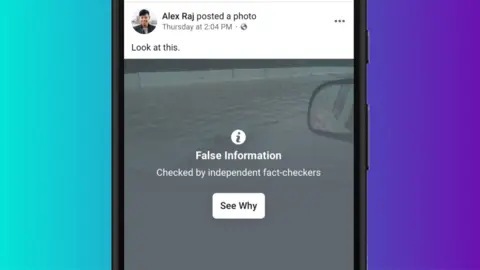

Facebook is changing how it treats Covid-19 misinformation after a damning report into its handling of the virus.

Users who have read, watched or shared false coronavirus content will receive a pop-up alert urging them to go to the World Health Organisation's website.

A study had indicated Facebook was frequently failing to clamp down on false posts, particularly when they were in languages other than English.

Facebook said the research did not reflect the work it had done recently.

The California tech firm says it will start showing the messages at the top of news feeds "in the coming weeks".

A spokesman for Facebook said it did not recognise the alerts as being a change of policy, but instead told the BBC they were "operational changes to the platform".

Truth redirect

The messages will direct people to a World Health Organisation webpage where myths are debunked.

A Facebook spokesman said the move will "connect people who may have interacted with harmful misinformation about the virus with the truth from authoritative sources, in case they see or hear these claims again off of Facebook".

The changes have been prompted by a major study of misinformation on the platform across six languages by Avaaz, a crowdfunded activist group.

Researchers say millions of Facebook users continue to be exposed to coronavirus misinformation, without any warning on the platform.

The group found some of the most dangerous falsehoods had received hundreds of thousands of views, including claims like "black people are resistant to coronavirus" and "Coronavirus is destroyed by chlorine dioxide".

Avaaz researchers analysed a sample of more than 100 pieces of Facebook coronavirus misinformation on the website's English, Spanish, Portuguese, Arabic, Italian and French versions.

The research found that:

- It can take the company up to 22 days to issue warning labels for coronavirus misinformation, with delays even when Facebook partners had flagged the harmful content for the platform.

- 29% of false content in the sample was not labelled at all on the English language version of the website.

- It is worse in some other languages, with 68% of Italian-language content, 70% of Spanish-language content, and 50% of Portuguese-language content not labelled as false.

- Facebook's Arabic language efforts are more successful, with only 22% of the sample of misleading posts remaining unlabelled.

Facebook says it is continuing to expand its multilingual network of fact-checkers, issuing grants and partnering with trusted organisations in more than 50 languages.

Fadi Quran, Campaign Director at Avaaz, said: "Facebook sits at the epicenter of the misinformation crisis.

"But the company is turning a critical corner today to clean up this toxic information ecosystem, becoming the first social media platform to alert all users who have been exposed to coronavirus misinformation, and directing them to life-saving facts."

One of the falsehoods that researchers tracked was the claim that people could rid the body of the virus by drinking a lot of water and gargling with salt or vinegar. The post was shared more than 31,000 times before eventually being taken down after Avaaz flagged it to Facebook.

However, more than 2,600 clones of the post remain on the platform, with nearly 100,000 interactions, and most of these cloned posts have no warning labels from Facebook.

Mark Zuckerberg, Facebook founder and chief executive, defended his company's work in an online post saying: "On Facebook and Instagram, we've now directed more than two billion people to authoritative health resources via our Covid-19 Information Center and educational pop-ups, with more than 350 million people clicking through to learn more.

"If a piece of content contains harmful misinformation that could lead to imminent physical harm, then we'll take it down. We've taken down hundreds of thousands of pieces of misinformation related to Covid-19, including theories like drinking bleach cures the virus or that physical distancing is ineffective at preventing the disease from spreading. For other misinformation, once it is rated false by fact-checkers, we reduce its distribution, apply warning labels with more context and find duplicates."

Mr Zuckerberg insists that warning pop-ups are working, with 95% of users choosing not to view the content when presented with the labels.

"I think this latest step is a good move from Facebook and we've seen a much more proactive stance to misinformation in this pandemic than during other situations like the US elections," says Emily Taylor, associate fellow at Chatham House and an expert on social media misinformation.

"We don't know if it will make a huge difference but it's got to be worth a try because the difference between misinformation in a health crisis and an election is literally that lives are at stake," she said.